Our last presentation from Day 2 of Network Field Day 5 came from a relatively new company – Plexxi. I hadn’t heard much from them before they signed up to present at NFD5. All I really knew was that they had been attracting some very high-profile talent from the Juniper ranks: first Dan Backman (@jonahsfo) shortly after NFD4, then Mike Bushong (@mbushong) earlier this year. One might speculate that when the talent is headed in a certain direction, it’s worth seeing what’s going on over there. If only I had known the whole story up front.

Mat Mathews kicked off the presentation with a discussion about Plexxi and what they are doing to differentiate themselves in the SDN space. It didn’t take long before their first surprise was revealed. Our old buddy Derick Winkworth (@cloudtoad) emerged from his pond to tell us why he had moved from Juniper to Plexxi just the week before. He’d kept the news of his destination pretty quiet. I should have guessed he would end up at a cutting-edge, SDN-focused company like Plexxi. It’s good to see smart people landing in places that not only excite them but also give them the opportunity to effect a lot of change in the emerging market of programmable networking.

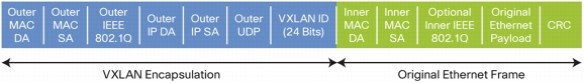

Marten Terpstra jumped in next to talk about the gory details of what Plexxi is doing. In a nutshell, it all boils down to affinity. Drawing on a 2009 study by Microsoft, Plexxi noticed that there are a lot of relationships between the applications running in a data center. Once you’ve identified these relationships, you can start doing things with them. You can create policies that provide for consistent communications between applications. You can isolate applications from one another. You can even decide which applications get preferential treatment when the network is congested. Now do you see the SDN applications? Plexxi started from the premise that there was more data to be gathered from the applications on the network, and when they went looking for it, sure enough it was there.

Armed with that information, they could start crafting a response. What they came up with was the Plexxi Switch. It’s a pretty standard 32-port 10GigE switch with 4 QSFP ports. The differentiator is the 40GigE uplinks to the other Plexxi Switches. Those are used to build a physical ring topology that lets the whole conglomeration work together to create what looked to me like a virtual mesh network. Once the switches are connected this way, Plexxi can start building affinities between the applications running at the edges of the network.
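To make the idea of "identifying relationships between applications" a little more concrete, here’s a minimal Python sketch. It is not Plexxi’s method. It assumes a hypothetical list of observed flows as (source app, destination app, bytes) tuples and an arbitrary byte threshold, and simply flags application pairs that talk to each other a lot as candidate affinities.

```python
from collections import Counter


def find_affinities(flows, min_bytes):
    """Return unordered app pairs whose total exchanged bytes meet a threshold.

    flows: iterable of (src_app, dst_app, nbytes) tuples. This record format
    and the threshold are illustrative assumptions, not a real Plexxi API.
    """
    totals = Counter()
    for src, dst, nbytes in flows:
        if src == dst:
            continue  # ignore traffic an app sends to itself
        pair = tuple(sorted((src, dst)))  # treat A->B and B->A as one relationship
        totals[pair] += nbytes
    return {pair for pair, total in totals.items() if total >= min_bytes}


# Hypothetical sample of observed flows.
flows = [
    ("web", "db", 800),
    ("db", "web", 400),
    ("web", "cache", 50),
    ("batch", "db", 2000),
]
print(sorted(find_affinities(flows, min_bytes=1000)))
# → [('batch', 'db'), ('db', 'web')]
```

A real system would presumably weigh far more than byte counts (latency sensitivity, security zones, and so on), but the shape is the same: observe the traffic, find the relationships, then attach policy to them.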

Plexxi has a controller that sits above the bits and bytes and constructs the policy-based affinities that let traffic go where it needs to go. It can also make sure traffic doesn’t go where it isn’t supposed to, as in the example Simon McCormack gives in the above video. Even if a VM is moved to a different host via vMotion or Live Migration, the Plexxi controller and network are smart enough to figure out that the VM now lives somewhere else and that the policy providing its isolated forwarding path needs to be reimplemented. That’s one of the nice things about programmatic networking. The higher-order controllers and functions figure out what needs to change in the network and implement the changes either automatically or with a minimum of human effort. This keeps the servers from mucking up the works with things like Distributed Resource Scheduler (DRS) moves or other unforeseen disasters. Think about the number of times you’ve seen a VM with an anti-affinity rule that keeps it from being evacuated from a host because of some dedicated link required for compliance or security reasons. With Plexxi, that can all be done automagically. At the end of the video, Derick even showed off some interesting possibilities around using Python to extend the capabilities of the CLI.
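Here’s a rough Python sketch of that "VM moved, so reprogram the path" loop. Everything in it is a hypothetical stand-in rather than Plexxi’s controller or API: switches are assumed to be numbered 0 to N-1 around a ring, `placement` maps each VM to the switch it sits behind, and `isolated_pairs` lists VM pairs that need their own forwarding path. When a move event arrives, the toy controller updates the placement and recomputes the shorter way around the ring for every isolated pair.

```python
from dataclasses import dataclass, field


def ring_path(src, dst, n):
    """Return the shorter list of switch hops from src to dst on an n-switch ring."""
    if not (0 <= src < n and 0 <= dst < n):
        raise ValueError("switch index out of range")
    clockwise = (dst - src) % n
    counter = (src - dst) % n
    step = 1 if clockwise <= counter else -1  # ties go clockwise
    hops = [src]
    node = src
    while node != dst:
        node = (node + step) % n
        hops.append(node)
    return hops


@dataclass
class Controller:
    """Toy controller; all names and behavior are illustrative assumptions."""
    ring_size: int
    placement: dict = field(default_factory=dict)       # VM name -> switch index
    isolated_pairs: list = field(default_factory=list)  # (vm_a, vm_b) tuples
    paths: dict = field(default_factory=dict)           # (vm_a, vm_b) -> switch hops

    def program(self):
        """Recompute the forwarding path for every isolated VM pair."""
        for vm_a, vm_b in self.isolated_pairs:
            self.paths[(vm_a, vm_b)] = ring_path(
                self.placement[vm_a], self.placement[vm_b], self.ring_size
            )

    def vm_moved(self, vm, new_switch):
        """Handle a migration event: update placement, then reprogram paths."""
        self.placement[vm] = new_switch
        self.program()


# Hypothetical VMs that must share an isolated path on a 6-switch ring.
ctl = Controller(
    ring_size=6,
    placement={"pci-app": 0, "pci-db": 2},
    isolated_pairs=[("pci-app", "pci-db")],
)
ctl.program()
print(ctl.paths[("pci-app", "pci-db")])  # → [0, 1, 2]
ctl.vm_moved("pci-db", 5)                # e.g. a DRS-triggered vMotion
print(ctl.paths[("pci-app", "pci-db")])  # → [0, 5]
```

Recomputing every pair on each move keeps the sketch simple; a production controller would more likely touch only the affinities that involve the VM that moved.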

If you’d like to learn more about Plexxi, you can check them out at http://www.plexxi.com. You can also follow them on Twitter as @PlexxiInc.

Tom’s Take

Plexxi has a very different feel from many of the SDN products I’ve seen so far. That’s probably because they aren’t trying to extend an existing infrastructure with programmability. Instead, they’ve taken a singular focus on affinity and managed to turn it into something that looks to have some fascinating applications in today’s data centers. If you’re going to succeed in the SDN-centric world, you either need to be at the front of the race as it’s being run today, like Cisco and Juniper, or you need a novel approach to the problem. Plexxi really is looking at this whole thing from the top down. As I mentioned to a few people afterwards, this feels like someone reimplemented QFabric with a significant amount of flow-based intelligence. That has implications for higher-order handling that a simple fabric forwarding engine can’t address. I will be keeping an eye on Plexxi down the road, if only for the crazy sock pictures.

Tech Field Day Disclaimer

Plexxi was a sponsor of Network Field Day 5. As such, they were responsible for covering a portion of my travel and lodging expenses while attending Network Field Day 5. In addition, they gave the delegates a Nerf dart gun and provided us with after-hours refreshments. At no time did they ask for, nor were they promised, any kind of consideration in the writing of this review. The opinions and analysis provided within are my own, and any errors or omissions are mine and mine alone.

Additional Coverage of Plexxi and Network Field Day 5

Smart Optical Switching – Your Plexxible Friend – John Herbert