One of the major advantages of owning an iPad, or in some cases an iPhone, is that you have a mobile computer at your fingertips that is quite easy to carry around the datacenter or networking closet. I have an iPad myself, and I find it very useful for documentation purposes. Whether it be taking notes about the configuration of a specific device or pulling up a PDF about a particular feature from Cisco’s website, the iPad has many uses. However, if I need to connect to a device such as a switch or a router, my iPad/iPhone options are limited. I can use a telnet or SSH client to remote into the system, but if I don’t know the management IP or the username/password combination, I can be sunk. Worse yet, if the switch has never been configured for remote access, the point is moot. If I want to use my trusty Cisco rollover console cable to get into the switch the old-fashioned way, I have to lug out my behemoth Lenovo W701 laptop and get it ready. That can be quite an endeavor, depending on how much room I have to work with and how long I’m going to be consoled in, since my laptop gets about 1.5 hours of battery life under the best of circumstances. Add in the difficulties I’ve faced with USB-to-serial adapters under Windows 7 64-bit, and you can see why I’m reluctant to use the console. However, there is hope for getting the best of both worlds.

A company called Redpark has started selling a rollover cable with a 30-pin iDevice connector. Engadget had a story about it. Naturally, I decided that I just had to have one of these. You know…for work and stuff. Anyway, I jumped right over to the Redpark website. Hello, sticker shock. This baby is going to set you back a cool $69. Add more for shipping and handling (whatever that is), so expect to shell out about $80 to get it to your neck of the woods, more if you need it tomorrow. That’s not all, folks! Even if you do manage to get your hands on one of these little jewels, you still need an app to access the console. Those of you who read this excellent blog post by Ruhann about console access on a jailbroken iPad are all set. The rest of us poor saps who haven’t jailbroken our iPads are left in the lurch. Fear not, because there is also a companion app on the App Store called Get Console (or Cisco Console Companion) that will give you console access. For a measly $9.99. After all, you’ve already spent $80, so what’s a few dollars more?

Once my console cable arrived in the mail, I was a little underwhelmed by the packaging:

Not much to look at. The contents of the box were even worse: the console cable, lovingly encased in bubble wrap, and this instruction sheet:

Bravo for making it straightforward and easy to read. Off to the App Store to download my new app. Except… “Cisco Console Companion” isn’t the official title of the app. It’s “Get Console”, along with a big disclaimer that it is in no way associated with Cisco. I’m guessing they had to use an alternate title in the App Store because of some wonky trademark issues that Uncle John wasn’t too pleased about. At any rate, it was a fast download, and then I was off and running.

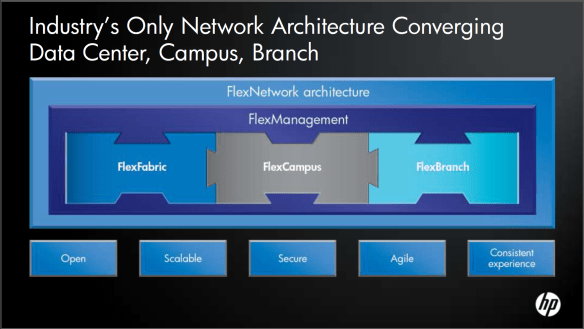

For the purposes of this test, I’m consoling into a Cisco Catalyst 3560 8-port switch. When I fired up the program, it popped up a one-time reminder that it was only for Cisco devices and that it would check each device to ensure that it was a genuine Cisco product. My best guess is that this is there to keep people from trying to use it as an Ethernet cable or something, because most reports I’ve seen say that it works just fine with any device that uses a rollover console cable, like Juniper or HP gear. I didn’t test this during my first run, but I will be testing it on some of those devices down the road. Note that since it is an RJ-45 rollover cable, it can’t be used on devices with DB-9 serial console ports or ones that need a null modem cable. Oh well, time to upgrade those old switches anyway. The cable itself feels rather thin, more like a fiber patch cable than a flat rollover cable or even a UTP cable. It’s about 6 feet long, so you don’t have to be right next to the device you’re consoling into, but don’t expect to be programming from across the room. Here’s a picture of the cable on top of my test switch:

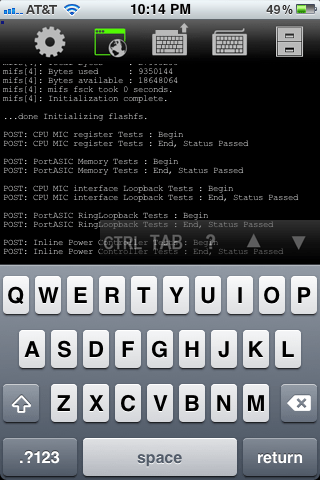

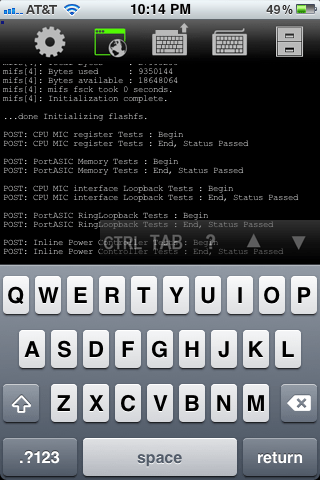

My first encounter with the Get Console program led me to this screen:

Fairly utilitarian, but that’s fine by me. I’m not really a “bells and whistles” kind of guy. The bottom of the screen is dominated by the on-screen keyboard, but that’s to be expected. Just above it is a collapsible keyboard bar with some very useful control keys. First is the all-important TAB key, which I’ve found sorely lacking in some of the telnet clients I’ve used. TAB saves me a ton of time. Next is the CTRL key, which toggles on when tapped and lets you use CTRL+ shortcuts to move around the command line or send a CTRL+C or CTRL+Z to end. Next is the BRK key, which sends an immediate break signal to the console. This is useful for those times when you need to enter ROMMON on bootup. Next is everyone’s favorite question mark key. Having it here is really helpful, since I don’t have to waste a keystroke switching to the number/symbol keyboard on the iPad. This is followed by the up and down arrow keys, which cycle backward and forward through your command history. Last is a Return key, which I didn’t really use, since the iPad keyboard has one built in.
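For the curious, here is roughly what the CTRL toggle puts on the wire. This is a minimal Python sketch of the standard ASCII control-code convention (keep only the low five bits of the key’s character code), not Get Console’s actual implementation, and the function name `ctrl_byte` is hypothetical. BRK can’t be expressed this way: a serial break isn’t a character at all, but the line held in the spacing state for longer than a full character frame, which is why it gets its own button.

```python
def ctrl_byte(key: str) -> bytes:
    """Return the byte a terminal conventionally sends for CTRL+<key>.

    ASCII control codes keep only the low five bits of the key's code,
    so this covers '@', the letters A-Z (either case), and '[', '\\',
    ']', '^', '_'. (Hypothetical helper, not part of any real app.)
    """
    if len(key) != 1:
        raise ValueError("expected a single character")
    code = ord(key)
    if ord("a") <= code <= ord("z"):
        code -= 0x20  # fold lowercase letters to uppercase
    if not 0x40 <= code <= 0x5F:
        raise ValueError(f"no standard control code for {key!r}")
    return bytes([code & 0x1F])


# The two shortcuts mentioned above, as single bytes on the serial line.
for key in ("C", "Z"):
    print(f"CTRL+{key} -> 0x{ctrl_byte(key).hex()}")
```

The same mapping covers the Cisco IOS escape sequence usually typed as CTRL+SHIFT+6, which most terminals send as `ctrl_byte("^")`, the byte 0x1E.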

The upper right corner of the app replicates many of the same keys as the collapsible keyboard bar, along with a paper clip icon. Tapping it pulls out a drawer that shows the contents of the clipboard, which you can paste directly onto the command line. So if you find yourself typing the same commands over and over, this is a handy shortcut (there are others we’ll get to in a second). One quick note: while you can type into this clipboard drawer, if you don’t copy what you typed before pasting, it will simply paste whatever was in the clipboard before. So be sure to copy before you paste.

The upper left includes the Settings button, the session button, the keyboard show/hide button, a button to show/hide the collapsible keyboard bar with the TAB and CTRL keys, and a filing cabinet for storing config files. The Settings menu is very feature-rich. You can choose to have the program connect automatically when it launches or wait for you to connect manually. There are also settings to change the baud rate and stop bits, which really helps when you are connecting to non-standard gear. You can have the app log all of your console sessions, and the logs can be stored in the filing cabinet for later examination. You can change the number of columns and rows, as well as the amount of scrollback in the window. Be aware that adding too many columns means you will need to scroll the screen left or right to see the output, as the main window looks to be about 80 columns wide. You can change the bell that dings when you do something you aren’t supposed to, as well as change the color scheme to something other than white-on-black text. The font size slider doesn’t correspond to actual point sizes, so you might need to play around with it to find a comfortable setting.
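To get a feel for what the baud rate and stop bits settings mean in practice, here is a rough Python sketch of asynchronous serial throughput. It assumes the common Cisco console default of 9600 baud, 8 data bits, no parity, and 1 stop bit (8N1), plus a 24-row screen (my assumption, since the app lets you change rows). The 115200 figure is only there for comparison. The function names are hypothetical, and the math ignores line-ending bytes and flow control.

```python
def chars_per_second(baud: int, data_bits: int = 8, parity: bool = False,
                     stop_bits: float = 1) -> float:
    """Approximate characters per second on an async serial line.

    Each character frame is one start bit, the data bits, an optional
    parity bit, and the stop bit(s), so 8N1 costs 10 bits per character.
    """
    frame_bits = 1 + data_bits + (1 if parity else 0) + stop_bits
    return baud / frame_bits


def seconds_to_fill(baud: int, columns: int = 80, rows: int = 24,
                    **frame) -> float:
    """Time to paint a full columns x rows screen (line endings ignored)."""
    return columns * rows / chars_per_second(baud, **frame)


for baud in (9600, 115200):
    cps = chars_per_second(baud)
    print(f"{baud:>6} baud 8N1: {cps:.0f} chars/s, "
          f"full 80x24 screen in {seconds_to_fill(baud):.2f} s")
```

At 9600 baud 8N1 that works out to about 960 characters per second, or roughly two seconds per full 80x24 screen, so a chatty debug can bury the prompt quickly even before any network latency is added.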

The session button lets you disconnect a console session manually, and it also gives access to one of the added benefits of this program. By signing up at http://www.get-console.com, you can add an option under Settings to connect to a remote console server at that website. You can then tap the session button and obtain a 7-digit access code that lets someone access your console session from the Get Console webpage. This is fairly handy if you have a junior administrator on site and need to walk them through a configuration. Or, if that same junior admin is on a network that is down, you can use a 3G iPad to connect to their console session and do some troubleshooting. I had to play around with the settings in order to test this feature. It looks like the app connects to the remote console server when you choose to share the session, and the access code lets the user on the website connect in, much like a reverse telnet connection. I couldn’t get the app to connect using the North America servers, but the Europe and Asia servers worked just fine. However, the latency on these connections was pitiful. Redraw on my screen could be measured in seconds. I tried entering some commands on the webpage, but even careful typing was enough to overrun the app’s keyboard buffer. And if you’re going to try to look at live debugs, you might as well forget about it. By the time you could send a break or “un all”, you’d be swamped in messages. Better to use the web app as a mirroring device for training or for simple troubleshooting. You can also choose to encrypt the sessions, which is a pretty good idea if you don’t want everyone on the Internet up in your business.

The filing cabinet is another interesting piece. By uploading configs to the Get Console website, you can store them in your filing cabinet and copy them onto the device locally. That way, if you have a template for your switches, you don’t need to worry about copying and pasting it out of an e-mail, where it may get buggered up by strange formatting issues. You can also have those pesky junior admins share an account and copy the configs to the filing cabinet for them, so all they have to do is walk out, plug in, and set up the switch with enough config for you to be able to telnet to it. There is local shortcut storage as well, so you can keep some of your more clever commands on your own iPad, safe from those who could use them to do harm. You can also store console logs for later upload or e-mail.

Out of the box, the font size was downright tiny. I had to bump the slider up about three-quarters of the way just to read it comfortably, and I was holding the iPad less than a foot from my face. The keyboard was quite responsive, and the output scrolled smoothly and was easy to follow. The app is set up to beep at you when you press a key that doesn’t do anything, such as the down arrow at the prompt when there are no more commands to replay. This is nice because it gives you some feedback, so you know when you’re beating your head against a brick wall.

In case you’re curious, this app is universal for both iPad and iPhone/iPod Touch. But other than glancing at the console, I’m not sure how useful it’s going to be on the smaller devices. There isn’t much screen real estate to start with, and all the extra controls don’t leave you much room to look at things. Here’s a screenshot to give you an idea of what I’m talking about:

Tom’s Take

It all comes down to money. Is there enough utility in this cable and app for you to justify spending about $100 on them? Do you often find yourself in a network room with only your iPad and a switch that won’t respond to any other method of input? I wouldn’t dream of trying to do any kind of heavy-duty debugging on this device. I’d rather have my full laptop with multiple apps and notepad windows to drag around to interpret console spam. Also, any kind of programming that requires lots of time at the keyboard would probably get uncomfortable after a while, unless you’re one of those people who happens to like typing on the iPad’s on-screen keyboard. I suppose you could haul along a wireless keyboard, but at that point you’re dragging along an awful lot of devices for simple console access.

I could see this being a useful tool for training or for an emergency toolkit. Throw an iPad and a cable in your kit and you have instant access to the console of a device from anywhere in the world. You could send less-skilled network admins out on site while a more senior person stays in the office and does enough simple troubleshooting or configuration to reach the equipment through SSH or telnet. The web piece, in my mind, is just too unresponsive to spend a lot of time on. Plus, if you are a fast typist like I am, you’re going to get rather frustrated with the delay in command execution, if you don’t outright lock the system up with all the characters you’re throwing at it.

The app does what it says; there’s no denying that. I find it very useful to have on my iPad, and I’ll probably use it going forward for many of my walkthroughs and audits. However, I think the $100 price tag is a little steep for something like this. I hope the price of the console cable will come down at some point, because $69 for this is a bit of a stretch, even by Apple standards. If there is enough demand, we may even see other vendors get into the market and offer something like this. If that happens, hopefully the Get Console people will support them as well. I had hoped that the software people might offer a gift card with the purchase of the cable, but I believe they are two different companies, so that’s probably out of the question. Redpark could always throw in a $10 iTunes gift card if they want to soften the blow of needing the additional app to use the cable, but marketing isn’t my department.

All in all, I think I’m going to get some use out of this app. However, you really need to think twice about whether a C-note is worth giving up for this type of functionality. If you want to learn more about these products, you can check out the console cable at http://redpark.myshopify.com/products/console-cable and the software at http://www.get-console.com/