I’m not a wireless engineer by trade. I don’t have a lab of access points that I’m using to test the latest and greatest solutions. I leave that to my friends. I fall more in the camp of having a working wireless network that meets my needs and keeps my family from yelling at me when the network is down.

Ubiquitous Usage

For the last five years my house has been running on Ubiquiti gear. You may recall I did a review back in 2018 after having it up and running for a few months. Since then I’ve had no issues. In fact, the only problem I had was not with the gear but with the machine I installed the controller software on. Turns out hard disk drives do eventually go bad and I needed to replace it and get everything up and running again. That was my intention when it went down sometime in 2021. Of course, life being what it is I deprioritized the recovery of the system. I realized after more than a year that my wireless network hadn’t hiccuped once. Sure, I couldn’t make any changes to it but the joy of having a stable environment is that you don’t need to make constant changes. Still, I was impressed that I had no issues that necessitated the recovery of my controller software.

Flash forward to late 2023. I’m talking with some of the folks at Ubiquiti about a totally unrelated matter and I just happened to mention that I was impressed at how long the system had been running. They asked me what hardware I was working with and when I told them they laughed and said I needed to check out their new stuff. I was just about to ask them what I should look at when they told me they were going to ship me a package to install and try out.

Dreaming of Ease

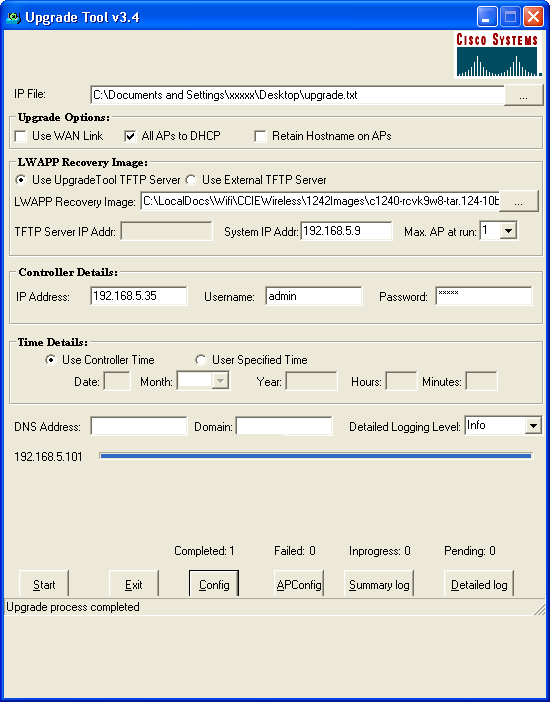

Tom Hildebrand really did a great job because I got a UPS shipment at the beginning of December with a Ubiquiti Dream Machine SE, a new U6 Pro AP, and a G5 Flex Camera. As soon as I had the chance I unboxed the UDM SE and started looking over the installation process. The UDM SE is an all-in-one switch, firewall, and controller for the APs. I booted the system and started to do the setup process. I panicked for a moment because I realized that my computer was currently doing something connected to my old network and I didn’t want to have to dig through the pile to find a laptop to connect in via Ethernet to configure things.

That’s when my first surprise popped up. The UDM SE allows you to download the UniFi app to your phone and do the setup process from a mobile device. I was able to configure the UDM SE with my network settings and network names and get it staged and ready to go without the need for a laptop. That was a big win in my book. Lugging your laptop to a remote site for an installation isn’t always feasible. And counting on someone to have the right software isn’t either. How many times have you asked a junior admin or remote IT person what terminal program they’re using only to be met with a blank stare?

Once the UDM SE was up and running, getting the new U6 AP joined was easy. It joined the controller, downloaded the firmware updates and adopted my new (old) network settings. Since I didn’t have my old controller software handy I just recreated the old network settings from scratch. I took the opportunity to clean out some older compatibility issues I was ready to be rid of, which were there thanks to an old Xbox 360 and some other ancient devices that were long ago retired. Clean implementations for the win. After the U6 was ready to go I installed it in my office and got ready to move my old AP to a different location to provide coverage.

The UDM SE detected that there were two APs that were running but part of a different controller. It asked me if I wanted to take them over and I happily responded in the affirmative. Sadly, when asked for the password to the old controller I drew a blank because that was two years ago and I can barely remember what I ate for breakfast. Ubiquiti has a solution for that and with some judicious use of the reset button I was able to reset the APs and join them to the UDM SE with no issues. Now everything is humming along smoothly. The camera is still waiting to be deployed once I figure out where I want to put it.

How is it all working? Zero complaints so far. Much like my previous deployment everything is humming right along and all my devices joined the new network without complaint. All the APs are running on new firmware and my new settings mean fewer questions about why something isn’t working because the kids are on a different network than the printer or one of the devices can’t download movies or something like that. Given how long I was running the old network without any form of control I’m glad it picked right up and kept going. Scheduling the right downtime at the beginning of the month may have had something to do with that but otherwise I’m thrilled to see how it’s going.

Tom’s Take

Now that I’ve been running Ubiquiti for the last five years how would I rate it? I’d say if you don’t want to rely on consumer APs from a big box store to run your home network you need to check Ubiquiti out. I know my friend Darrel Derosia is doing some amazing enterprise things with it in Memphis but I don’t need to run an entire arena. What I need is seamless connectivity for my devices without worrying about what’s going to go down when I walk upstairs. My home network budget precludes enterprise gear, but it fits nicely with Ubiquiti’s price point and functionality. Whether I’m trying to track down a lost Nintendo Switch or limit bandwidth so game updates aren’t choking out my cable modem I’m pleased with the performance and flexibility I have so far. I’m still putting the UDM SE through its paces and once I get the camera installed and working with it I’ll have more to say but rest assured I’m very thankful to Tom and his team for letting me kick the tires on some awesome hardware.

Disclaimer: The hardware mentioned in this post was provided by Ubiquiti at no charge to me. Ubiquiti did not ask for a review of their equipment and the opinions and perspectives represented in this post are mine and mine alone with no expectation of editorial review or compensation.